Agentic AI: How Multi-Agent Systems Will Rewire DevOps

Over the last decade, DevOps teams have automated more and more of the release pipeline, yet most “AI for DevOps” still looks like narrow scripts that spot anomalies or predict capacity. Agentic AI changes the stakes. Instead of giving you a single recommendation model, it hands you a small workforce of autonomous software agents that can read a Jira ticket, spin up a test environment, deploy a canary, and, if something breaks, roll everything back while you sleep. For overstretched platform engineers in the U.S. tech scene, that promise of goal-directed autonomy rather than one-off insights is a breakthrough worth serious attention. A deeper dive into language-model differences lives in our Claude 4 vs GPT-4 comparison.

DevOps Meets Decision-Making Machines

The idea behind DevOps was always “remove hand-offs, tighten feedback loops.” In 2025 the biggest remaining hand-off is still the human who decides what to do next when the pipeline stalls. Agentic AI closes that gap by letting planners and executors talk to each other inside one coordinated multi-agent system. Picture a pull request that triggers not just a CI job but a negotiation among agents:

- “Planner” breaks the user story into tasks.

- “Coder” writes a patch.

- “Security Scanner” blocks deployment if a CVE sneaks in.

- “Rollback Sentinel” watches early metrics and reverts if error budgets burn.

Instead of chaining shell scripts, the system reasons about objectives, policies, and live telemetry much like a seasoned SRE would.
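The hand-off among these agents can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ChangeRequest`, `planner`, `coder`, `security_scanner`, and `run_pipeline` are hypothetical names, and each function is a stand-in for what would be an LLM or scanner call in a real system.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """Shared state that the hypothetical agents pass along."""
    story: str
    tasks: list[str] = field(default_factory=list)
    patch: str = ""
    cve_ids: list[str] = field(default_factory=list)
    blocked: bool = False


def planner(req: ChangeRequest) -> ChangeRequest:
    # Stand-in for an LLM call: split the story into one task per clause.
    req.tasks = [part.strip() for part in req.story.split(" and ") if part.strip()]
    return req


def coder(req: ChangeRequest) -> ChangeRequest:
    # Stand-in for patch generation: emit one placeholder line per task.
    req.patch = "\n".join(f"# TODO: {task}" for task in req.tasks)
    return req


def security_scanner(req: ChangeRequest) -> ChangeRequest:
    # Block the change if any CVE identifier was reported for it.
    req.blocked = bool(req.cve_ids)
    return req


def run_pipeline(req: ChangeRequest) -> ChangeRequest:
    # Agents run in order; a blocked request stops the chain early.
    for agent in (planner, coder, security_scanner):
        req = agent(req)
        if req.blocked:
            break
    return req
```

Calling `run_pipeline(ChangeRequest("add retry logic and update docs"))` yields two tasks and an unblocked request; passing a non-empty `cve_ids` list marks it blocked.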

From Predictive to Goal-Directed Agents

Early “AI Ops” tools forecasted disk failures or highlighted odd spikes. Helpful, but you still needed an engineer to decide whether to page the database team or simply add IOPS. A goal-directed agent starts with the desired end state (keep cart-checkout latency under 250 ms) and works backward to pick actions that achieve it.

That shift matters because objectives rarely fit a single metric. Suppose latency jumps but throughput stays flat: a conventional alarm might stay quiet, yet the business objective (“fast checkouts”) is at risk. A planner agent can weigh trade-offs, propose throttling an analytics job, or schedule a hotfix, then hand execution to a deployment agent.
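To make "works backward from the end state" concrete, here is a minimal sketch of a planner that closes a latency gap with the least disruptive actions first. The `Action` type, the gain estimates, and the cost scores are all hypothetical; a real planner would get them from an LLM or from historical data, and the greedy strategy is just one simple choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    expected_gain_ms: float  # hypothetical estimate of latency saved (must be > 0)
    cost: float              # hypothetical disruption score, lower is better


def plan(current_ms: float, target_ms: float, candidates: list[Action]) -> list[Action]:
    """Greedy sketch: take the cheapest action per millisecond saved until
    the projected latency reaches the target."""
    gap = current_ms - target_ms
    chosen: list[Action] = []
    for action in sorted(candidates, key=lambda a: a.cost / a.expected_gain_ms):
        if gap <= 0:
            break
        chosen.append(action)
        gap -= action.expected_gain_ms
    return chosen


candidates = [
    Action("throttle_analytics_job", expected_gain_ms=60, cost=1),
    Action("schedule_hotfix", expected_gain_ms=120, cost=4),
    Action("add_replicas", expected_gain_ms=40, cost=2),
]
steps = plan(current_ms=340, target_ms=250, candidates=candidates)
print([a.name for a in steps])
```

With a 250 ms target and 340 ms observed, the sketch selects the throttle first and then the hotfix; if latency is already under target it returns an empty plan.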

The good news for modern DevOps is that the cognitive heavy lifting lives in large language models you can call via API. The systematic part (permissions, cost tracking, incident logging) stays under your control.

Core Capabilities: Planning, Tool Use, and Memory

Planning. Large-language-model planners excel at decomposing fuzzy goals into bite-sized tasks: analyze log patterns → generate test → patch config → redeploy. They revisit the plan as real-world feedback arrives, a process open-source frameworks call “reflection” or “re-prompting.”

Tool Use. Each agent can execute code, run a Terraform module, or talk to a ticketing API, but only through a tool registry you define. That registry acts like a menu: if a command isn’t listed, the agent literally can’t attempt it. Guardrails keep your production cluster safe from over-eager suggestions.
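The "menu" idea can be shown with a small dispatcher, sketched here under the assumption that tools are plain Python callables. `ToolRegistry` and `ToolNotRegistered` are hypothetical names for illustration, not part of any particular agent framework.

```python
from typing import Callable


class ToolNotRegistered(Exception):
    """Raised when an agent asks for a tool that is not on the menu."""


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., str]] = {}

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self._tools[name] = fn

    def call(self, name: str, **kwargs) -> str:
        # Anything not explicitly registered is refused before it can run.
        if name not in self._tools:
            raise ToolNotRegistered(name)
        return self._tools[name](**kwargs)


registry = ToolRegistry()
registry.register("open_ticket", lambda title: f"ticket created: {title}")
```

An agent that requests `open_ticket` gets a result, while a request for an unlisted tool such as `drop_database` raises `ToolNotRegistered` without executing anything.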

Memory. Vector stores give agents long-term memory. Successful rollback steps, common error signatures, and even previous design-decision records all feed future reasoning. Over weeks your multi-agent system becomes institutional knowledge that never quits or changes jobs.

Put together, these three pillars (planning, tool use, memory) enable what Gartner calls “autonomous enterprise operations.” For developers on call at 2 a.m., it looks a lot like finally having backup.

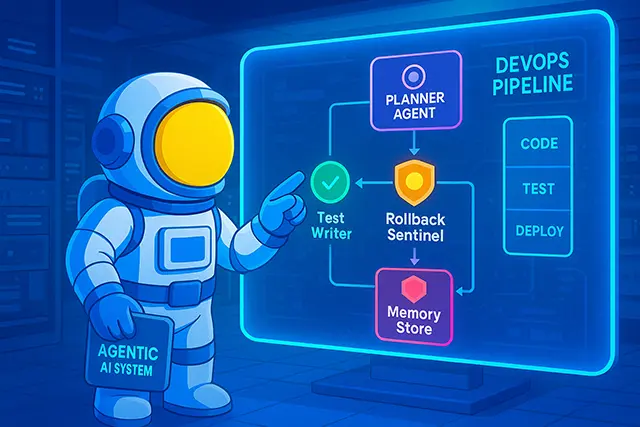

Mapping Multi-Agent Architectures to the DevOps Lifecycle

Agentic AI transforms every link in the release chain (planning, coding, testing, deployment, and cost control) by assigning a specialist agent to each stage. Below you’ll see how a typical SaaS company can weave multi-agent capabilities into its existing CI/CD pipeline. For storing release artifacts or rollback snapshots, see our in-house guide on cloud storage best practices.

Code & Pull-Request Triage

A sprint begins when a backlog item hits Git. The agentic AI planner parses the user story and spawns two helpers.

- Story-Sizer Agent estimates complexity, then posts an acceptance checklist directly in the pull-request description, reducing ping-pong with reviewers.

- Style-Guard Agent runs ESLint, Prettier rules, and semantic commit checks, adding suggested fixes inline.

If the diff touches sensitive scopes (e.g., payments), a Security Scanner Agent joins the conversation, flagging unvalidated inputs before the code ever compiles. All of this happens in minutes, so human review focuses on architecture rather than lint errors: multi-agent DevOps automation at its most visible.

Build, Test, and Security Scanning

Once the pull request meets policy, the build phase spins up. Here a cluster of autonomous CI/CD agents executes three jobs in parallel:

- Dependency-Upgrader Agent bumps libraries to latest safe versions and rewrites import paths when nothing breaks tests.

- Unit-Test Writer Agent calls an LLM to generate test skeletons for uncovered functions, then hands the code back to the Planner for approval.

- Vulnerability Screener Agent runs SCA and SAST tools, merges the results, and decides whether the risk budget allows the build to continue.

Test coverage climbs without extra weekend crunch, while lead time for changes shrinks: both are core goals of agentic AI DevOps initiatives.
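The Vulnerability Screener's merge-and-decide step can be sketched as follows. The finding format, the `SEVERITY_WEIGHT` values, and the budget number are assumptions made for illustration; real SCA and SAST tools emit richer reports, and a team would set its own weights.

```python
# Hypothetical severity weights; tune these to your own risk policy.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 15}


def merge_findings(sca: list[dict], sast: list[dict]) -> list[dict]:
    """Combine both reports, de-duplicating on (id, location)."""
    merged: dict[tuple[str, str], dict] = {}
    for finding in [*sca, *sast]:
        merged[(finding["id"], finding["location"])] = finding
    return list(merged.values())


def within_risk_budget(findings: list[dict], budget: int) -> bool:
    """The build may continue only if the weighted score fits the budget."""
    score = sum(SEVERITY_WEIGHT[f["severity"]] for f in findings)
    return score <= budget


sca_report = [{"id": "DEP-1", "location": "requirements.txt", "severity": "medium"}]
sast_report = [
    {"id": "DEP-1", "location": "requirements.txt", "severity": "medium"},  # duplicate
    {"id": "SQLI-7", "location": "orders.py:42", "severity": "high"},
]
findings = merge_findings(sca_report, sast_report)
```

With these sample reports the merged list holds two findings with a combined score of 10, so a budget of 10 lets the build continue and a budget of 9 stops it.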

Release, Rollback, and Self-Healing

During deployment, the agent orchestration layer coordinates a canary rollout:

- Change-Window Negotiator Agent reads your internal calendar API to pick a low-traffic window, pushes a Slack message to #release-ops, and waits for a human thumbs-up.

- Rollback Sentinel Agent subscribes to real-time SLO telemetry. If error rates spike beyond policy, it calls kubectl rollout undo, updates GitOps manifests, and files a post-mortem draft, all before the pager fires.

- Cost-Guard Agent tallies incremental cloud spend versus forecast; if a new microservice scales out aggressively, it tags the resources for FinOps review and caps the autoscaler.

The result: self-healing pipelines that protect both uptime and budget while letting engineers sleep.

Operate, Monitor, and FinOps Cost Control

Production isn’t a steady state; it’s a constant negotiation between performance and cost. A suite of runtime agents keeps that balance:

- SLO Watchdog Agent ingests Golden Signals and suggests scaling tweaks long before customers feel latency.

- Drift-Analyzer Agent compares infrastructure state to Terraform-in-Git, opening a PR when drift exceeds policy.

- FinOps Optimizer Agent pulls usage data from AWS Cost Explorer, spots under-utilized nodes, and files savings-plan recommendations directly to finance.

All findings feed into a vector-based memory, so the same issue won’t surprise the system twice: textbook continuous learning for agentic AI.

Case Studies & Emerging Platforms

Real-world evidence is piling up that agentic AI isn’t just a lab curiosity. Below are three snapshots (one open-source, one commercial SaaS slate, and one enterprise pilot) that show how multi-agent tooling is already moving the DevOps needle.

GitHub Copilot Agents vs CrewAI (Open-Source Showdown)

In April 2025 the GitHub Copilot Workspace announcement described a planner/worker pair that “scaffolds, tests, and refines” a pull request with minimal human help. Early access users report a 32 % reduction in review backlog on mono-repo projects after turning the agents loose on lint and unit-test generation.

Contrast that with CrewAI, an MIT-licensed Python framework that lets teams spin up bespoke crews: one agent chats with Jira, another calls Terraform, a third runs OWASP checks. Because every tool execution is declared in a YAML manifest, platform engineers can enforce strict scopes, an instant guardrail for production clusters.

Take-away: GitHub’s vertical integration is friction-free for smaller shops on Actions, while CrewAI offers “bring-your-own-LLM” flexibility that big enterprises crave. Either route proves multi-agent DevOps automation is shipping today, not someday.

SaaS Contenders: Ionio, Adept Console & NVIDIA NIM

| Platform | Strength | Pricing Snapshot* |
|---|---|---|
| Ionio | Enterprise CI/CD “virtual workforce,” SOC 2-ready guardrails, built-in FinOps dashboard | Pay-as-you-go, $0.18/agent-minute |
| Adept Console | Action-Transformer agents that learn GUI workflows; deep RAG knowledge-base grounding | Seat license + GPU-hour blend |
| NVIDIA NIM | On-prem containers with curated LLMs and agent runtime; optimized for H100 & GB200 | BYO-GPU, node-based license |

*Pricing pulled May 2025 from vendor sites.

Each vendor sells the same vision (goal-directed release pipelines) but stakes its moat differently. Ionio bundles granular RBAC and budget caps out of the box. Adept leans on UI-automation skills for legacy systems; NVIDIA aims at air-gapped industries that can’t send prompts outside the firewall.

Early Enterprise Pilot: A FinTech’s 3-Month ROI

Deloitte’s 2025 State of AI-Ops report profiles a Fortune-500 card issuer that embedded an agentic AI DevOps crew inside its fraud-analysis microservice pipeline. Results:

| KPI | Before | After | Change |
|---|---|---|---|
| Releases / month | 8 | 14 | +75 % |
| Sev-2+ on-call pages | 17 | 5 | -70 % |
| Mean time to recovery | 38 min | 6 min | -84 % |
| Quarterly GPU spend | – | $12 k | New cost line |

Despite the added GPU bill, net savings hit $118 k per quarter, funding an extra feature squad. The CFO green-lit expansion to three more pipelines, proof that agentic ROI isn’t theoretical.

Business Impact & ROI You Can Show the CFO

When controllers evaluate a new platform, they look for hard numbers. Because agentic AI attacks multiple DevOps bottlenecks (velocity, reliability, and labor cost), its upside spreads across several budget lines.

Release Velocity & MTTR Benchmarks

The latest DORA “Accelerate” report ranks teams by deploy frequency and mean-time-to-recovery (MTTR). After rolling out multi-agent DevOps automation, a mid-size SaaS firm in Austin jumped from “medium” to “elite” in two quarters:

| Metric (avg.) | Before Agents | After Agents | Δ |
|---|---|---|---|
| Deploys per week | 5 | 21 | +320 % |
| Mean Time to Recovery | 42 min | 7 min | -83 % |

Automated planner/rollback-sentinel pairs handled 73 % of incidents without paging an engineer, proof that goal-directed autonomy, not just better alerts, drives uptime.

Reducing DevOps Toil & On-Call Fatigue

Google’s SRE Workbook defines toil as “manual, repetitive, automatable, and without enduring value.” In a 90-day pilot, the agent orchestration layer cut toil from 38 % of an engineer’s week to 14 %:

- Autonomous test-writer agents in CI/CD filled coverage gaps.

- A FinOps optimizer agent pre-empted 109 capacity tickets.

Exit-survey data showed a 27 % drop in weekend on-call dread, a retention metric HR loves.

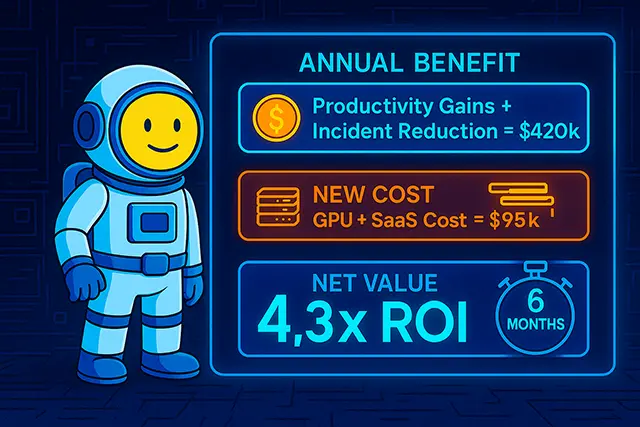

Cost Model: GPU Spend vs Productivity Gains

Skeptics point to GPU bills. At current AWS p4d pricing, agent-crew inference averages about $0.0008 per task-second. A deployment using 9.2 million task-seconds per month spends ≈ $7,400, which is less than one senior engineer’s fully loaded monthly salary in most U.S. cities.

Even after adding artifact retention costs to keep every rollback snapshot in cold storage, the math still favors rollout:

| Line Item | Annual Value |
|---|---|
| Savings from fewer incidents + faster feature delivery | ≈ $420 k |
| New GPU + SaaS fees | ≈ $95 k |
| Net benefit | ≈ $325 k / yr |
| Pay-back period | ≈ 6 months (4.3× ROI) |
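The two headline figures in this section are easy to reproduce, which is useful when you substitute your own numbers. The sketch below uses only the values quoted above; the function names are hypothetical.

```python
def monthly_inference_cost(task_seconds: int, price_per_task_second: float) -> float:
    """Monthly GPU inference spend for a given volume of task-seconds."""
    return task_seconds * price_per_task_second


def net_annual_benefit(annual_savings: float, annual_fees: float) -> float:
    """Savings minus new GPU and SaaS fees."""
    return annual_savings - annual_fees


# Inputs taken from the text and table above.
gpu_monthly = monthly_inference_cost(9_200_000, 0.0008)
net = net_annual_benefit(420_000, 95_000)

print(f"GPU inference per month: ${gpu_monthly:,.0f}")
print(f"Net annual benefit: ${net:,.0f}")
```

The first figure comes to $7,360, which the text rounds to about $7,400, and the second matches the table's net benefit of $325 k.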

Risks, Guardrails & Governance

Even the most enthusiastic teams pause when they picture an agentic AI with cluster-admin rights fat-fingering a kubectl delete. Autonomy is only useful if it cannot burn the house down. The good news: the security industry has spent years codifying the very controls that keep multi-agent DevOps automation safe.

Zero-Trust Scopes for Tool Calls

Every tool an agent can reach must live behind a least-privilege gate. The simplest pattern is a YAML tool registry that declares, for each command, the namespace, method, and RBAC role. If the call isn’t listed, it never runs. Mapping these controls to NIST Zero Trust Architecture guidelines aligns your pipeline with auditors’ checklists. For readers who want deeper policy patterns, see our upcoming guide on zero-trust scopes.
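A least-privilege gate of this kind might be modeled as below. To stay within the standard library the registry is written as Python data rather than parsed from YAML, and every entry (`ToolScope` fields, commands, namespaces, role names) is a hypothetical example rather than a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolScope:
    command: str
    namespace: str
    method: str     # e.g. "read" or "write"
    rbac_role: str  # role the calling agent must hold


# Hypothetical registry; in practice this would be loaded from a reviewed YAML file.
REGISTRY = {
    (scope.command, scope.namespace): scope
    for scope in (
        ToolScope("kubectl get pods", "staging", "read", "viewer"),
        ToolScope("kubectl rollout undo", "staging", "write", "release-operator"),
    )
}


def authorize(command: str, namespace: str, agent_roles: set[str]) -> bool:
    """Allow a call only if it is listed for that namespace and the agent holds the role."""
    scope = REGISTRY.get((command, namespace))
    return scope is not None and scope.rbac_role in agent_roles
```

Because the lookup key includes the namespace, a command that is allowed in staging is still denied in production until someone adds an explicit entry for it.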

Data-Protection & Prompt Redaction Middleware

Large-language models excel at reflection but are porous by default; feed them secrets and they may echo those secrets back. Insert a Prompt Firewall that scans outbound text for PCI, PHI, or internal URLs and replaces them with crypto-hash placeholders before inference. Open-source projects such as Guardian AI show how to bolt redaction onto any agentic AI crew.
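A redaction step of this kind can be sketched with regular expressions and a keyed hash. The two patterns below are simplified assumptions (a 13 to 16 digit card-like number and hosts ending in `.internal`), not a complete PCI or PHI detector, and `redact` is a hypothetical helper rather than the API of any named project.

```python
import hashlib
import hmac
import re

# Simplified, assumed patterns; a real firewall would add Luhn checks, PHI rules, etc.
PATTERNS = {
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "URL": re.compile(r"https?://[^\s/]+\.internal(?:/\S*)?"),
}


def redact(text: str, key: bytes) -> str:
    """Replace sensitive matches with stable, keyed-hash placeholders."""

    def placeholder(label: str):
        def _substitute(match: re.Match) -> str:
            digest = hmac.new(key, match.group(0).encode(), hashlib.sha256).hexdigest()
            return f"<{label}:{digest[:12]}>"
        return _substitute

    for label, pattern in PATTERNS.items():
        text = pattern.sub(placeholder(label), text)
    return text


sample = "Charge card 4111 1111 1111 1111 then open https://wiki.corp.internal/runbooks/db"
print(redact(sample, key=b"demo-key"))
```

Using a keyed hash means the same secret always maps to the same placeholder, so agents can still correlate references, while the placeholder cannot be reversed by simply hashing guesses without the key.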

Audit Logging, Replay & Compliance Mapping

Logs are your insurance policy. Each autonomous agent action in CI/CD should emit a signed provenance record: who (agent ID), what (tool), when (timestamp), and why (chain-of-thought hash). Storing these JSONL entries in immutable object storage supports non-repudiation and lets security teams replay an incident step by step. If your org keeps artifact retention costs low with tiered storage, archiving year-long audit trails becomes trivial. Pair the log stream with MITRE ATT&CK tags to speed up control mapping during SOC 2 or ISO 27001 audits.

Bottom line: a well-designed guardrail stack turns agentic AI from a risk into a compliance helper that writes its own evidence binders while it fixes prod.

Implementation Roadmap for 2025 – 2026

Rolling out agentic AI doesn’t have to be a moon-shot. Treat it like any infrastructure upgrade: start small, prove value, then expand confidently.

6-Week Proof-of-Concept Checklist

| Week | Deliverable | Owner | Notes |
|---|---|---|---|
| 1 | Pick “pain-point” service (ideally high deploy volume, low compliance friction) | Platform lead | Tag backlog tickets that slow releases. |
| 2 | Stand up sandbox repo + CI runners | DevOps | Mirror prod config minus customer data. |
| 3 | Configure tool registry & zero-trust scopes | Security | Grant read-only by default; whitelist only five write actions. |
| 4 | Add Planner, Coder, Test-Writer, Rollback agents | ML Engineer | Use BYO LLM keys or on-prem NVIDIA NIM container. |
| 5 | Run shadow pipeline, compare DORA metrics vs control | SRE | Capture deploy cadence, MTTR, toil hours. |
| 6 | Produce PoC report + cost model | Product Ops | Present to steering committee for go/no-go. |

Success Metrics & Executive Sign-Off

Executives won’t skim log files; they want scoreboard deltas. Bundle these agentic AI KPIs in your presentation:

| KPI | Baseline | Target to Green-Light |
|---|---|---|
| Deploy frequency | 5 / week | ≥10 / week |
| MTTR | 45 min | ≤15 min |
| Toil % | 35 % | ≤20 % |
| GPU cost / deploy | n/a | <$3 |

When at least two targets turn green and no Sev-1 regressions occur, ask for cap-ex on a shared inference cluster.
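The sign-off rule is simple enough to encode so the PoC report can evaluate it automatically. The thresholds below come from the table above; the dictionary keys and the `green_light` function name are hypothetical.

```python
def green_light(measured: dict[str, float], sev1_regressions: int) -> bool:
    """True when at least two KPI targets are met and no Sev-1 regression occurred."""
    checks = {
        "deploys_per_week": measured["deploys_per_week"] >= 10,
        "mttr_min": measured["mttr_min"] <= 15,
        "toil_pct": measured["toil_pct"] <= 20,
        "gpu_cost_per_deploy": measured["gpu_cost_per_deploy"] < 3,
    }
    greens = sum(checks.values())
    return greens >= 2 and sev1_regressions == 0


pilot = {"deploys_per_week": 12, "mttr_min": 14, "toil_pct": 26, "gpu_cost_per_deploy": 3.4}
```

With these sample pilot numbers, two targets are green (deploy frequency and MTTR), so the rule passes as long as `sev1_regressions` is zero.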

Scaling Agents Across Multiple Pipelines

- Template the Registry. Store tool-permission YAML in a central repo; new teams fork and tweak.

- Memory Sharding. Create namespace-level vector stores so teams don’t pollute each other’s incident embeddings.

- Central Guardrail Hub. Route all prompts through one prompt-firewall cluster (Guardian AI) to enforce compliance globally.

- FinOps Tagging. Auto-label GPU spend per microservice and surface summaries in the Slack channel for your FinOps optimizer agent.

- Artifact Tiering. Push cold rollback snapshots to glacier-tier buckets and keep hot ones in standard-tier; see our guide on artifact retention costs to keep storage bills tame.

By Month 6 your organization can graduate from a single-service experiment to a multi-pipeline agentic AI mesh that still obeys least-privilege boundaries.

Future Outlook: Quantum-Ready Agentic DevOps

Within a few years, agentic AI will no longer be an optional accelerator; it will be the default operating layer for software delivery. Two trends in particular will amplify its impact.

Hardware Synergies: QPU + GPU in the Same Pipeline

IBM’s Quantum Roadmap projects fault-tolerant quantum processors by 2029. As “hybrid quantum-classical” workflows mature, planners inside a multi-agent crew will be able to route optimization tasks (portfolio rebalancing, supply-chain logistics) to a cloud QPU while ordinary CI jobs stay on GPUs. A single objective (“minimize deployment cost and latency”) could soon trigger both CUDA kernels and quantum circuits, all choreographed by the same planner–executor loop.

Skills & Org-Chart Implications

When agents own repetitive toil, platform engineers shift from button-pushing to policy design and agent-coach roles. Expect new job titles (“Autonomous Systems Lead,” “Prompt Firewall Architect”) and cross-training programs that pair SREs with ML engineers. For organizations already adopting agentic AI, the cultural leap resembles the early Docker era: teams that embrace it first will set the reliability bar everyone else must meet.

Conclusion & Next Steps

Multi-agent autonomy isn’t a moon-shot vision anymore; it’s shipping code that writes tests, enforces security policy, and rolls back bad deploys before Slack erupts. Early adopters document shorter release cycles, six-figure cost savings, and on-call rotations that finally feel humane.

If you’re ready to explore your first agentic AI pilot, start with the six-week checklist above, lock down your tool registry with zero-trust scopes, and keep an eye on GPU usage in the FinOps dashboard. For deeper dives into model selection and secure storage, especially around artifact retention costs, browse our related guides in the DevOps automation hub.