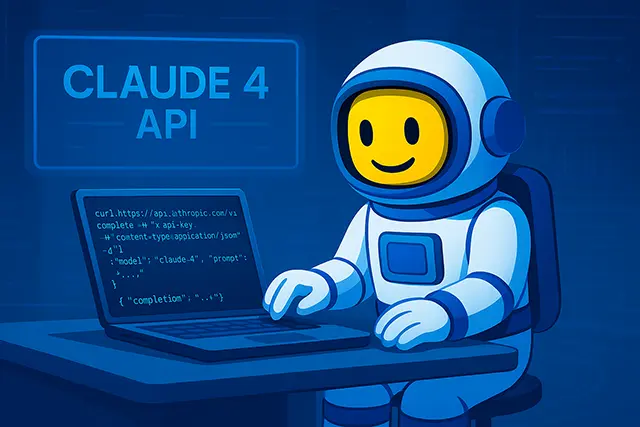

Claude 4 API Integration Guide: Build Smarter Workflows with AI

If you’re building modern automation pipelines or developer tools that need intelligence baked in, you’ve likely looked at OpenAI, Gemini, or Claude. But there’s something uniquely stable and safe about Claude 4. Unlike other models that sometimes go off the rails or require constant prompt wrangling, Claude 4 is remarkably predictable, especially when used via the API.

In this article, we’ll walk through how to integrate the Claude 4 API into your real-world workflow. Whether you’re an engineer building an internal dashboard, a startup automating customer support, or an ops team trying to summarize documents at scale, this guide is for you. And yes, code is included.

What Is Claude 4 API and Why It Matters for Developers

Claude 4, developed by Anthropic, isn’t just a language model; it’s a toolkit for smart automation. While many know Claude through chat interfaces, its API is where it truly shines for technical teams. Think of it less as an “AI assistant” and more as an AI-powered logic engine for rewriting, summarizing, and aligning content to your business needs.

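Where other APIs may require aggressive fine-tuning or filtering, Claude is built with safety at the core, thanks to Constitutional AI, Anthropic’s approach of training the model against a written set of principles. This means developers can call the API with more confidence in the output, even in complex, sensitive environments, though validating output is still worthwhile in production.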

Claude 4 API Basics – Endpoint, Input, and Output Format

The Claude 4 API is accessed via a standard HTTPS POST request using JSON. It’s designed to be developer-friendly and minimal: just send a well-structured payload, and you’ll receive a clean, predictable response.

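The primary endpoint you’ll be using is:

https://api.anthropic.com/v1/messages

This is the Messages API, the interface through which Claude 4 models are served. The older `/v1/complete` Text Completions endpoint is a legacy interface and does not serve them. You interact with the Messages API by sending a POST request with headers and a JSON body containing your messages and control parameters.

Here are the main components:

- `model`: The ID of the model to use, for example `"claude-sonnet-4-20250514"` or `"claude-opus-4-20250514"`. Check Anthropic’s model list for current IDs.

- `messages`: A list of conversation turns, each with a `role` (`"user"` or `"assistant"`) and `content`. The instruction or input text you want processed goes in a user message.

- `max_tokens`: The upper limit on how many tokens you want in the output.

- `stop_sequences`: An optional array of strings where generation should stop.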
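A minimal sketch of the request and response shapes for Anthropic’s Messages API (`/v1/messages`) may help here. The helper names and the sample response below are illustrative assumptions, the model ID is only an example, and no network call is made.

```python
def build_payload(prompt, model="claude-sonnet-4-20250514", max_tokens=300, stop_sequences=None):
    """Build a request body for POST /v1/messages (the model ID is an example)."""
    payload = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    # stop_sequences is optional, so only include it when provided.
    if stop_sequences:
        payload["stop_sequences"] = list(stop_sequences)
    return payload


def extract_text(response_json):
    """Join the text blocks of a Messages API response into one string."""
    return "".join(
        block["text"]
        for block in response_json.get("content", [])
        if block.get("type") == "text"
    )


# Hand-written sample that mimics the response shape; not real API output.
sample_response = {
    "type": "message",
    "role": "assistant",
    "content": [{"type": "text", "text": "The memo announces a new travel policy."}],
    "stop_reason": "end_turn",
}

payload = build_payload("Summarize this internal memo in two paragraphs.")
print(payload["messages"][0]["content"])
print(extract_text(sample_response))
```

Keeping payload construction and response parsing in small functions like these makes it easier to unit-test your integration without calling the API.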

Claude’s Token Window Advantage for Workflow Context

One standout feature? Claude 4 models accept up to 200,000 tokens of context in a single call, which is enough for several hundred pages of text.

This opens up use cases like:

- Parsing long documents

- Handling multi-part conversations

- Passing detailed customer history into one prompt

Claude doesn’t lose context halfway through. It remembers, processes, and responds coherently.
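Before sending a long document, it can help to check roughly whether it fits. The sketch below uses a crude four-characters-per-token heuristic, which is an assumption and not the real tokenizer, and the 200,000-token window and 4,000-token output reserve are adjustable parameters. The function names are hypothetical.

```python
def estimate_tokens(text, chars_per_token=4.0):
    """Very rough token estimate; real counts depend on the tokenizer."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(text, context_window=200_000, reserved_for_output=4_000):
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) <= context_window - reserved_for_output


def split_into_chunks(text, max_tokens_per_chunk, chars_per_token=4.0):
    """Split text on blank lines so each chunk stays under the budget.

    A single paragraph larger than the budget is kept whole as its own chunk.
    """
    max_chars = int(max_tokens_per_chunk * chars_per_token)
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


document = "First section.\n\nSecond section.\n\nThird section."
print(fits_in_context(document))
print(split_into_chunks(document, max_tokens_per_chunk=8))
```

If a document does not fit, one option is to summarize each chunk separately and then summarize the summaries.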

Real Use Cases: Where Claude 4 API Shines in Business Automation

Claude isn’t just another chatbot; it becomes powerful when embedded into real processes. Below are some of the most practical business automation use cases we’ve seen.

AI-Powered Document Summarization in Internal Dashboards

Imagine your operations team receives 50-page PDF policy documents daily. You could route them into Claude via API, asking:

“Summarize the following document in 3 paragraphs, using plain English.”

The output can be piped directly into an internal tool like Notion, Airtable, or a custom dashboard.

Claude 4 in Automated Customer Replies via API

Let’s say a customer submits a ticket with a wall of text. Claude can condense that message and even write an empathetic, helpful reply.

Prompt example:

“You are a friendly support agent. Summarize the customer message, then reply in 2–3 polite sentences offering help.”

This can be called programmatically via webhook triggers in your CRM.
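As a rough sketch of the webhook flow, the code below turns an incoming ticket into a Messages API payload, with the support persona passed through the top-level `system` field. The handler name and the `event["message"]` field are assumptions about what your CRM sends, and the model ID is only an example.

```python
SUPPORT_SYSTEM_PROMPT = (
    "You are a friendly support agent. Summarize the customer message, "
    "then reply in 2-3 polite sentences offering help."
)


def build_support_request(ticket_text, model="claude-sonnet-4-20250514"):
    """Turn a raw ticket body into a Messages API payload."""
    cleaned = ticket_text.strip()
    if not cleaned:
        raise ValueError("ticket_text is empty")
    return {
        "model": model,
        "max_tokens": 400,
        "system": SUPPORT_SYSTEM_PROMPT,
        "messages": [{"role": "user", "content": cleaned}],
    }


def handle_ticket_webhook(event):
    """Hypothetical webhook handler: `event` is the parsed JSON your CRM sends."""
    payload = build_support_request(event["message"])
    # Send `payload` with your HTTP client, then post the reply back to the CRM.
    return payload


request_body = handle_ticket_webhook({"message": "  My order never arrived.  "})
print(request_body["messages"][0]["content"])
```

Keeping the persona in `system` and the customer text in the user message keeps the instructions separate from untrusted input.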

Claude for Tone & Language Correction in CMS Pipelines

If you’re working with user-submitted content (blogs, listings, reviews), Claude can rewrite text to fit your editorial standards:

Prompt:

“Rewrite this for clarity and professionalism, but keep the original intent.”

Perfect for CMS staging workflows in WordPress, Webflow, or internal tools.

How to Set Up Claude 4 API Step-by-Step

Let’s move from theory to execution. If you want to build an integration using Claude 4, the setup is refreshingly straightforward. In this section, you’ll walk through the exact steps to get your first API call running, from getting your key to building your request.

Getting Your API Key from Anthropic

To use Claude 4 programmatically, you’ll need access to the official Claude API endpoint. Here’s how to get started:

- Go to console.anthropic.com: This is Anthropic’s developer console, where you manage API credentials and billing.

- Sign up or log in: Use a valid email and secure password. If you’re working in a team, create a separate service account for production integrations.

- Navigate to the API Keys section: After logging in, go to the left-hand menu and select API Keys. You’ll see a page listing any existing keys and a button to generate new ones.

- Click “Create Key”: Give your key a meaningful name like `production-backend` or `content-summarizer`. Set permission scope if available.

- Copy the API key shown: This is the key you’ll use in the `x-api-key` header of every HTTP request.

- Store it securely: Do not hard-code this key into your app. Instead, save it in an environment variable, `.env` file, or secure secret manager.

  - For local dev: use Python’s `dotenv` or Node’s `process.env`

  - For cloud: use AWS Secrets Manager, GCP Secret Manager, or Vercel/Netlify environment variables

If you’re integrating Claude into a content generation system (say, for rewriting or summarizing documents), this same setup works with workflows like the ones covered in Claude 4 for Writers. And if you’re building support systems, check how tone management is handled safely in What Is Constitutional AI.

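Example .env file:

    ANTHROPIC_API_KEY=sk-ant-xxxxxxxxxxxxxxxxx

Python example using os.getenv:

    import os

    # os.getenv reads the process environment; load the .env file first
    # (for example with python-dotenv) if the variable is defined there.
    API_KEY = os.getenv("ANTHROPIC_API_KEY")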

Important: Anthropic keys are sensitive credentials. Never expose them in frontend JavaScript, shared Google Docs, GitHub repos, or error messages.
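One way to follow this advice is to load the key in a single place, fail fast when it is missing, and only ever log a redacted form. This is a small sketch; `load_api_key`, `redact_key`, and `MissingAPIKeyError` are hypothetical names, not part of any Anthropic library.

```python
import os


class MissingAPIKeyError(RuntimeError):
    """Raised when the API key is not configured."""


def load_api_key(env=None, var_name="ANTHROPIC_API_KEY"):
    """Read the key from the environment and fail fast if it is absent."""
    env = os.environ if env is None else env
    key = (env.get(var_name) or "").strip()
    if not key:
        raise MissingAPIKeyError(f"{var_name} is not set")
    return key


def redact_key(key, visible=4):
    """Return a log-safe form of the key showing only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


# A fake mapping stands in for os.environ so the example runs anywhere.
demo_key = load_api_key({"ANTHROPIC_API_KEY": " sk-ant-abcd1234 "})
print(redact_key(demo_key))
```

Passing the environment as a parameter keeps the function easy to test without touching real credentials.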

Making Your First Claude 4 API Request (with Python)

Here’s a basic working script using requests:

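    import os

    import requests

    # Read the key from the environment rather than hard-coding it.
    api_key = os.environ["ANTHROPIC_API_KEY"]

    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }

    body = {
        # Example model ID; check Anthropic's model list for current names.
        "model": "claude-sonnet-4-20250514",
        "max_tokens": 300,
        "messages": [
            {"role": "user", "content": "Summarize this internal memo in two paragraphs."}
        ],
    }

    # Claude 4 models are served by the Messages API, not /v1/complete.
    response = requests.post(
        "https://api.anthropic.com/v1/messages",
        headers=headers,
        json=body,
        timeout=30,
    )
    response.raise_for_status()

    # The reply text lives in the first content block of the response.
    print(response.json()["content"][0]["text"])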

Tip: Claude works best when you use natural, clear prompts.

Claude 4 API Integration with Make.com or Zapier

Not a Python developer? No worries.

You can call Claude 4 using HTTP modules in Make.com, Zapier, or Retool. Just set up:

- Method: POST

- URL: https://api.anthropic.com/v1/messages

- Headers: `x-api-key`, `anthropic-version`, `content-type`

- JSON body with `model`, `max_tokens`, `messages`, etc.

Hook it up to your Airtable, Google Docs, Notion, or webhook events from other platforms.

For visual builders, this makes Claude available to non-coders who still build real tools.

Claude 4 API vs OpenAI & Gemini: Which to Use for Your Product?

Let’s compare the 3 top contenders for business-ready LLM API work:

| Feature | Claude 4 | GPT-4 | Gemini 1.5 Pro |
|---|---|---|---|
| Context Window | 200K tokens | 32K tokens (limited) | 1M tokens (unstable) |
| Output Tone Control | Strong | Variable | Generic |
| Safety by Default | Constitutional AI | Requires filters | Less reliable |
| Pricing (est. USD) | Moderate | High | TBD / usage restricted |
| Prompt Consistency | High | Moderate | Varies |
| Dev Experience | Simple JSON | Slightly complex | UI-focused |

Claude is not the cheapest, but it offers predictable, context-aware, and safe outputs, which are critical for production apps.

Best Practices for Claude 4 API Integration in Production

Integrating Claude 4 into a production system requires more than just calling the API. Below are essential practices for making your implementation fast, fault-tolerant, and cost-efficient, complete with code examples where relevant.

Caching Responses

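If your prompts are frequently repeated, caching the response avoids unnecessary API calls. You can hash the prompt to create a unique cache key. Note that a hash only matches identical prompts, so slightly varied prompts need to be normalized before hashing if you want them to share a cache entry.

    import hashlib

    import redis

    # Connection details are placeholders; adjust them for your deployment.
    cache = redis.Redis(host="localhost", port=6379, decode_responses=True)

    def cached_completion(prompt):
        # Hash the prompt to get a stable, fixed-length cache key.
        cache_key = "claude:" + hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        cached = cache.get(cache_key)
        if cached is not None:
            return cached
        # call_claude_api is your own wrapper that returns the reply text.
        response = call_claude_api(prompt)
        cache.set(cache_key, response, ex=86400)  # Cache for 1 day
        return response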

This approach works well in batch jobs, content processing queues, or UIs that allow reprocessing.
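To let near-identical prompts share a cache entry, the key can be built from a normalized prompt plus every parameter that affects the output. The sketch below is one way to do that, with a dictionary-backed cache standing in for Redis. All names are hypothetical, and collapsing whitespace is only safe when whitespace carries no meaning in your prompts.

```python
import hashlib
import json


def make_cache_key(model, prompt, max_tokens):
    """Hash everything that affects the output, after normalizing whitespace."""
    normalized = " ".join(prompt.split())
    raw = json.dumps(
        {"model": model, "prompt": normalized, "max_tokens": max_tokens},
        sort_keys=True,
    )
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class InMemoryCache:
    """Dictionary-backed stand-in for Redis, handy in tests."""

    def __init__(self):
        self._store = {}

    def get_or_compute(self, key, compute):
        # Only call the (expensive) compute function on a cache miss.
        if key not in self._store:
            self._store[key] = compute()
        return self._store[key]


calls = []


def fake_model_call():
    """Placeholder for a real API call; records that it ran."""
    calls.append(1)
    return "summary text"


cache = InMemoryCache()
key_a = make_cache_key("example-model", "Summarize  this email\nthread.", 300)
key_b = make_cache_key("example-model", "Summarize this email thread.", 300)
first = cache.get_or_compute(key_a, fake_model_call)
second = cache.get_or_compute(key_b, fake_model_call)
print(key_a == key_b, len(calls))
```

Including the model and `max_tokens` in the key avoids serving a response that was generated under different settings.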

Handling Timeouts Gracefully

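Even stable APIs like Claude can occasionally fail due to network issues. Always put a timeout and retry strategy in place.

    import random
    import time

    import requests

    URL = "https://api.anthropic.com/v1/messages"
    RETRYABLE = {429, 500, 502, 503, 529}  # rate limits and transient server errors

    def call_claude_with_retry(payload, headers, max_retries=3):
        for attempt in range(max_retries):
            try:
                # Long generations can take a while, so allow a generous timeout.
                response = requests.post(URL, headers=headers, json=payload, timeout=30)
                if response.status_code not in RETRYABLE:
                    response.raise_for_status()  # other 4xx errors surface immediately
                    return response.json()
            except (requests.exceptions.Timeout, requests.exceptions.ConnectionError):
                pass  # fall through to the backoff below
            # Exponential backoff with jitter before the next attempt.
            time.sleep((2 ** attempt) + random.uniform(0.5, 1.5))
        raise RuntimeError("Claude API unavailable after multiple retries.")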

Retry with exponential backoff reduces failure rates while being gentle on the API.
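To make the backoff behaviour easy to reason about and test, the schedule can be computed separately from the HTTP call. This is a small sketch with hypothetical helper names; the set of retryable status codes is an assumption you should adapt to the errors you actually see.

```python
import random

RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def is_retryable(status_code):
    """Rate limits and server-side errors are worth retrying; other 4xx are not."""
    return status_code in RETRYABLE_STATUS


def backoff_delays(max_retries, base=1.0, cap=30.0, jitter=0.5, rng=random.random):
    """Return the wait in seconds before each retry.

    Each delay is base * 2**attempt, capped at `cap`, plus up to `jitter` seconds.
    """
    delays = []
    for attempt in range(max_retries):
        delay = min(cap, base * (2 ** attempt))
        delays.append(delay + rng() * jitter)
    return delays


print(backoff_delays(4, jitter=0.0))
print(is_retryable(429), is_retryable(400))
```

Capping the delay keeps a long retry chain from stalling a queue worker for minutes, and the jitter spreads out retries from many clients.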

Prompt Templating

Standardizing prompts with variables makes your system easier to maintain and audit. Templates help reduce unexpected behavior from inconsistent instructions.

    template = (
        "You are a professional writing assistant.\n"
        "Tone: {tone}\n"
        "Please process the following input:\n\n{input}"
    )

    final_prompt = template.format(
        tone="friendly but formal",
        input="Please rephrase this message for our customer support page.",
    )

Store templates in your codebase, config files, or a remote prompt manager.
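As a sketch of what a small template registry might look like, the example below uses the standard library’s `string.Template`, so literal braces in a template (for instance a JSON example) need no escaping. The registry layout and the `render_prompt` name are assumptions for illustration.

```python
from string import Template

TEMPLATES = {
    "rewrite": Template(
        "You are a professional writing assistant.\n"
        "Tone: $tone\n"
        "Please process the following input:\n\n$input_text"
    ),
}


def render_prompt(name, **variables):
    """Render a named template, raising a clear error if something is missing."""
    try:
        template = TEMPLATES[name]
    except KeyError:
        raise ValueError(f"unknown template: {name}") from None
    try:
        return template.substitute(**variables)
    except KeyError as missing:
        raise ValueError(f"missing template variable: {missing}") from None


prompt = render_prompt(
    "rewrite",
    tone="friendly but formal",
    input_text='Reply with JSON like {"status": "ok"}.',
)
print(prompt)
```

Failing loudly on a missing variable is usually preferable to sending a prompt with a hole in it.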

Logging and Auditing

Always log what’s going into and coming out of the Claude API. For example:

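    import time

    # response is the parsed JSON from the Messages API; response_time,
    # current_user, and db are assumed to come from your own application code.
    log_entry = {
        "timestamp": time.time(),
        "prompt_preview": prompt[:200],
        "response_preview": response["content"][0]["text"][:200],
        "latency_ms": response_time,
        "user_id": current_user.id,
    }

    db.logs.insert_one(log_entry)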

Mask or truncate user data when necessary, especially in multi-tenant SaaS environments.

Logging enables:

- Debugging weird output

- Reproducibility in tests

- Trust & transparency for end users or clients
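The masking advice above can be sketched as a small helper that scrubs email addresses before anything is written to the log. The regular expression is a simple approximation and the function names are hypothetical; real deployments usually need to cover more kinds of personal data.

```python
import re
import time

# Simple approximation of an email address; not a full RFC-compliant pattern.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_emails(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[email]", text)


def make_log_entry(prompt, reply_text, latency_ms, user_id, preview_chars=200):
    """Build a log record with masked, truncated previews."""
    return {
        "timestamp": time.time(),
        "prompt_preview": mask_emails(prompt)[:preview_chars],
        "response_preview": mask_emails(reply_text)[:preview_chars],
        "latency_ms": latency_ms,
        "user_id": user_id,
    }


entry = make_log_entry(
    prompt="Customer jane.doe@example.com asked about a refund.",
    reply_text="Hi Jane, we have issued your refund.",
    latency_ms=812,
    user_id="user-123",
)
print(entry["prompt_preview"])
```

Masking before truncating matters: truncating first could cut an address in half and leave a fragment the pattern no longer matches.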

Guardrails Using Stop Sequences

Without a defined end marker, Claude might continue writing beyond your desired output. Adding `stop_sequences` ends generation as soon as one of the listed strings would be produced.

    {
      "model": "claude-sonnet-4-20250514",
      "max_tokens": 200,
      "stop_sequences": ["###", "[END]"],
      "messages": [
        {"role": "user", "content": "Summarize this in 3 concise sentences, then write [END]:\n\n[INPUT]"}
      ]
    }

Ask for `[END]` in your prompt and set it as a stop sequence if you’re building complex, multi-turn logic or need strict output limits.
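It is also worth checking why generation stopped, since a reply cut off by the token limit looks different from one that reached your marker. The sketch below reads the `stop_reason` and `stop_sequence` fields of a Messages API response; the sample dictionaries are hand-written for illustration and the function name is hypothetical.

```python
def interpret_stop(response_json):
    """Classify how generation ended based on the response's stop_reason field."""
    reason = response_json.get("stop_reason")
    if reason == "stop_sequence":
        return f"stopped at marker {response_json.get('stop_sequence')!r}"
    if reason == "max_tokens":
        return "truncated: hit max_tokens before finishing"
    if reason == "end_turn":
        return "finished naturally"
    return f"other: {reason}"


# Hand-written samples that mimic the response shape; not real API output.
hit_marker = {"stop_reason": "stop_sequence", "stop_sequence": "[END]"}
cut_off = {"stop_reason": "max_tokens", "stop_sequence": None}

print(interpret_stop(hit_marker))
print(interpret_stop(cut_off))
```

A truncated reply is often a signal to raise `max_tokens` or tighten the prompt, so it is worth logging separately.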

Final Thoughts: Claude 4 API as a Developer-Ready AI Partner

Claude 4 is the kind of tool devs love: reliable, clean, and safe. Unlike models that require constant wrestling, Claude “just works,” especially in structured workflows and content pipelines.

If you’re a developer or automation lead looking for an API that summarizes clearly, respects tone, and won’t go off-script, Claude 4 is a top-tier pick.

And once you’ve integrated it, you’ll find new places to plug it in: onboarding, HR docs, email generation, policy summaries, CMS cleanup, and more.

The Claude 4 API isn’t just about generating text; it’s about enhancing logic in your systems.